Technology is both a saviour and a headache. In the past couple of years vast numbers of office workers adopted remote working during Covid lockdowns, while organisations of every kind began to market their products online. Virtual meetings became the norm. Everyone, everywhere, learned to live and work in a more remote world.

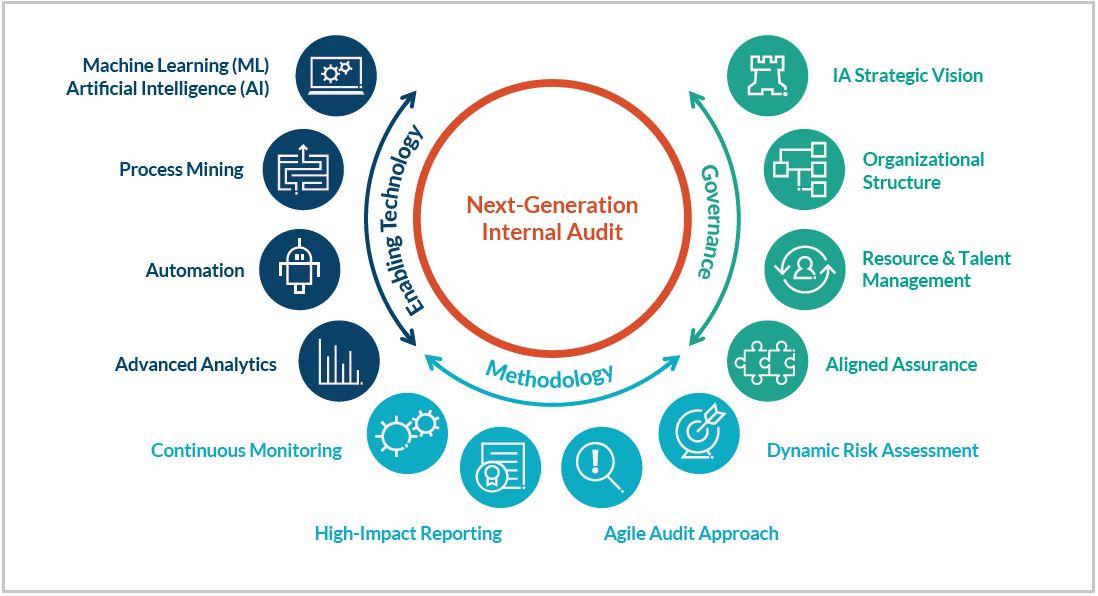

While some find the opportunities technology creates endlessly exciting, others (often those responsible for monitoring, controlling and offering assurance that it is used safely, ethically and in a way that conforms to regulations) find it incomprehensible and threatening. Many internal audit teams have struggled for several years to find people with IT audit expertise and are now under increasing pressure to use more technology, more effectively, in their audits. The rapid emergence of new developments in technology, from AI to blockchain, adds to their concerns.

The complexity of auditing emerging technology in a new digital age, however, should not be overstated. Tried and tested internal audit processes and methodologies are not obsolete. Internal auditors need to understand what existing and emerging technologies do in their organisations and what they will do in the future. They also need to be aware of the potential risks and assurance gaps.

However, they can do much of this without specialist IT skills – as in all internal audit engagements, curiosity, imagination, and the ability to ask the right questions of the right people are key. External support can be brought in for specialist areas, but all internal audit teams are likely to engage with emerging technology in some form and should take time now to think about what this means for their business and for their audits.

Emerging technologies

Most internal audit teams are becoming familiar with auditing the technology that enables remote working, as well as well-established corporate IT systems, and many are starting to use data analytics and Big Data to inform their audits. However, it is now important to watch the emerging technologies that are still relatively uncommon but are likely to develop rapidly. Internal audit needs to stay one step ahead of any risks or assurance gaps these create.

A recent poll of attendees at a Wolters Kluwer webinar on emerging technology found that 20 percent said their organisations were using robotic process automation (RPA), 12 percent were using artificial intelligence (AI) and 3 percent were using blockchain technology. Significantly, half of the attendees said their organisations were using none of these as yet, although 15 percent said they were using more than one. Given how rapidly technology use advances, this indicates that it is essential for internal audit to understand what these technologies mean for their business, but that most teams still have time to get on the front foot.

“There are myriad emerging technologies, some of which are very new and some that have been around for a while, some that will become widespread and others that are likely to be relevant primarily in particular sectors, such as autonomous vehicles,” explained Jae Yeon Oh, presales consultant, UK and Ireland at Wolters Kluwer TeamMate, who presented the webinar.

Other examples include everything from virtual reality, internet of behaviours, internet of things, bioinformatics, and natural language processing to quantum computing and 5G. The most commonly used and best established are RPA, AI, and blockchain, so these are the ones that internal auditors are most likely to have to audit in the near future.

Internal auditors may be required to assess the strategic decision-making process when an organisation adopts an emerging technology, but this audit work will be similar to that for other large corporate decisions. The main challenges for audit are to assess any new risks that an emerging technology brings into the organisation once it is adopted, and how management monitors and controls these risks. The internal audit team, therefore, needs to understand what the technologies will be used for, how they will be used, and by whom.

Common risks with RPA

In the case of RPA, which is used to automate frequently repeated processes that are vital for everyday business, risks include the selection of inappropriate processes, incorrect configuration, unexpected costs, security, inadequate performance, and change management.

For example, one use of RPA could be a chatbot designed to filter common questions from customers. Incorrect configuration could cause the bot to delay passing customers who need further help to a human contact, alienating them. Selecting an inappropriate process could mean that a bot is employed to deal with questions that could indicate fraud or involve sensitive information and that require individual thought and attention.
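The two chatbot risks described above can be made concrete with a small routing rule that an auditor might ask to see evidence of. This is a minimal sketch only: the keyword list, the turn limit, and the `route` function are hypothetical illustrations, not taken from the webinar or from any RPA product.

```python
import re

# Hypothetical topics that should never be handled by the bot alone.
ESCALATION_KEYWORDS = {"fraud", "stolen", "unauthorised", "complaint", "password"}

# Assumed limit on bot replies before the customer is handed to a person.
MAX_BOT_TURNS = 3


def route(message, bot_turns_so_far):
    """Return "human" or "bot" for an incoming customer message."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    # Sensitive or potentially fraudulent topics go straight to a person.
    if words & ESCALATION_KEYWORDS:
        return "human"
    # Avoid trapping customers in a long loop with the bot.
    if bot_turns_so_far >= MAX_BOT_TURNS:
        return "human"
    return "bot"


print(route("What are your opening hours?", 0))
print(route("I think there is fraud on my account", 0))
print(route("Where is my order?", 3))
```

In a sketch like this, the audit questions are who owns the keyword list and the turn limit, how often they are reviewed, and whether changes to them go through change management.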

Similarly, an RPA system may incur unexpected costs if, for example, a bot replaces call centre staff, but then requires specialist maintenance and more skilled and expensive people to manage it. Other considerations for internal audit would be whether a bot handles sensitive data that comes under privacy or other regulations and whether it regularly connects to organisations beyond the corporate firewall, creating new risks of breaches or misused data.

The sheer volume of data passing through an RPA system may require new safeguards and checks. At the other end of the spectrum, it’s important to monitor whether the system works effectively: can it connect to all the internal systems it needs in order to produce meaningful, accurate answers to questions? Internal audit could also look at whether the IT team is sufficiently experienced and has the training and resources to manage it.

Management of an RPA system may also be a risk. If it is used to automate an area where frequent changes are implemented then it may necessitate extra layers of processes each time this happens, which adds time, complexity and the risk that people will cut corners.

Common risks for AI

AI comes with another list of new risks. Security is important – the more data the system uses, from more sources, the more entry points and connections are formed and the greater the potential risks. There may also be physical risks if, for example, an organisation uses AI in products such as autonomous vehicles, or to identify when heavy machinery requires maintenance. AI may also be used to diagnose medical conditions. If it is badly configured or malfunctions, it could harm people before the problem is spotted.

Some risks overlap with those for other types of emerging technology – data privacy, for example, is likely to be important when using AI. Internal audit should check that the data used and shared has the necessary explicit consent from data providers. Is this correctly configured and adequately controlled?

There have also been cases where AI systems have been primed with data that leads to inherent bias. If a system is designed using data collected over a long period and is configured to make decisions based on prior rationale, it is likely to make similar decisions, which may reflect human biases from across that period. If such a system is used to screen job applicants, for example, the risk is not only that a company will shortlist the wrong candidates but also that it will suffer reputational damage and possibly legal costs. Internal audit should check how this is being monitored and whether bias is identified, managed, and corrected.
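One simple way to monitor for bias of this kind is to compare how often each group receives a favourable outcome. The sketch below is illustrative only: the group labels and sample data are invented, and the 0.8 review threshold is an assumption borrowed from the "four-fifths" rule of thumb used in some employment guidance, not a figure from the webinar.

```python
from collections import Counter


def selection_rates(decisions):
    """Return the share of favourable outcomes per group.

    decisions: iterable of (group, shortlisted) pairs, shortlisted being a bool.
    """
    totals = Counter()
    selected = Counter()
    for group, shortlisted in decisions:
        totals[group] += 1
        if shortlisted:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Ratio of the lowest selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so no disparity to measure
    return min(rates.values()) / highest


# Hypothetical sample: ten decisions per group.
sample = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(sample)
ratio = impact_ratio(rates)
flagged = ratio < 0.8  # assumed review threshold, to be set by the organisation
print(rates, ratio, flagged)
```

A low ratio does not prove that the system is biased, but it gives internal audit a concrete trigger for asking management how the disparity is explained and what corrective action is taken.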

Internal audit should also ask questions about how the AI system can be adapted if external circumstances change radically. AI is designed to evolve and adapt, but it will do so within the original parameters. If the world changes quickly, as it did when the pandemic began, it may need new parameters.

Deliberate misuse needs to be considered as well as accidental failings. The more powerful and connected a system is, the more destructive it can be if it is misused, and this could put at risk trade secrets or plant operations and security.

Common risks for blockchain technology

The strengths of blockchain can also be its weaknesses. The fact that transactions cannot be reversed and that data cannot be accessed without the required keys makes the system secure, but it also means that organisations need specific protocols and management processes to ensure that they are not locked out, as well as clear contingency plans.
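To see why recorded transactions are so hard to alter, consider a minimal hash-chained ledger. This is a teaching sketch under simplifying assumptions, not how any particular blockchain platform is implemented: it leaves out keys, consensus and networking, and the function names and record fields are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, data, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records):
    """Link each record to its predecessor through the predecessor's hash."""
    chain = []
    prev_hash = GENESIS_HASH
    for index, data in enumerate(records):
        current = block_hash(index, data, prev_hash)
        chain.append(
            {"index": index, "data": data, "prev_hash": prev_hash, "hash": current}
        )
        prev_hash = current
    return chain


def is_valid(chain):
    """Recompute every hash and confirm each block points at its predecessor."""
    prev_hash = GENESIS_HASH
    for block in chain:
        if block["prev_hash"] != prev_hash:
            return False
        expected = block_hash(block["index"], block["data"], block["prev_hash"])
        if block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True


chain = build_chain(["pay supplier 100", "pay supplier 250", "refund 40"])
intact = is_valid(chain)

chain[1]["data"] = "pay supplier 999"  # attempt to rewrite history
tampered_valid = is_valid(chain)
print(intact, tampered_valid)
```

Because each block's hash depends on the one before it, changing an earlier record invalidates the chain from that point on. That property is what makes a mistaken transaction difficult to reverse, which is why contingency plans matter.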

Blockchain depends on interoperability – it must be able to interface with multiple internal and external systems. Internal audit needs to gain assurance that it can do this and that it is therefore working properly. Operating through network nodes could also expose the organisation to cyber-attacks and data hacks, so security issues are important.

Internal auditors should also ensure that the organisation has the necessary data management processes and complies with regulations. The regulatory landscape is still evolving for blockchain, so audit teams should check that compliance managers are following developments constantly and adapting processes accordingly.

Further risks stem from the fact that the organisation is transacting with external organisations that it does not know. Auditors should ask whether this could expose it to the risk of, for example, contravening anti-money-laundering legislation.

Scoping audit programmes around emerging technologies

All emerging technologies rely on connections and interactions with internal and external systems. New risks are introduced with every new connection. This is an evolution of risks that internal audit already considers when auditing existing IT systems, but the volume of data and complexity of the systems are new.

In addition, internal audit should be aware of the vital importance of adequate security and contingency processes around encryption and keys.

Some emerging technology falls under existing regulations, for example, over privacy, but much is still unregulated or lightly regulated. Internal audit should expect regulations to evolve rapidly.

Last, but not least, internal audit should continue to monitor the overall health of the rest of the IT in their organisation as usual and not be distracted by their focus on emerging technology. It’s important to find ways to combine the ongoing monitoring of old and new IT risks.

When asked for the most important consideration when planning and scoping an audit of emerging technology, 62 percent of attendees at the TeamMate webinar cited awareness of the risks introduced by technology. Their next most important consideration was the alignment of new technology with enterprise strategy (23 percent), while 10 percent were concerned about the lack of subject matter experts.

Jae Yeon Oh suggests that planning and scoping an emerging technologies audit should not differ significantly from other audits and that, as elsewhere, a risk-based audit process is best practice. “It’s important to have a holistic view – does the coverage across the audit universe adequately offer assurance over all identified risks and is this in line with the organisation’s risk appetite?” she said. “Planning and scoping an emerging technology audit is not fundamentally different from other engagements, but it may bring in new questions and cause some resourcing issues.”

Among the attendees at the webinar, 36 percent said they currently outsourced or co-sourced support for emerging technology audits. Jae Yeon Oh suggests that one benefit of co-sourcing is that the audit team can learn from working alongside those with more experience. Only 11 percent of attendees had auditors with experience of auditing emerging technology and 23 percent admitted they did not audit emerging technology at all.